PiCAROs (Physical Controllers for Augmented Reality Objects)

When I started learning more about augmented reality, one thing stood out to me: in almost every AR promo video, someone is waving their hands around, making sweeping gestures in the air. (And that's if the virtual objects are interactive at all. In fact, the most common AR apps I've seen basically just overlay images on top of magazine ads and the like.)

There's something profoundly unsatisfying about interacting with augmented reality objects through gestures alone.

Touch matters. Our brains love tactile feedback. Think about how cranking an aluminum volume knob is so much more pleasing than sliding a volume bar on your phone screen.

So why do our brains love tactile stimulation so much? Why do we find it so satisfying?

I believe touch is so satisfying because sensory associations are how we make sense of the world. When we feel the resistance of a volume knob and correlate that with the auditory feedback of the volume increasing, we implicitly understand their relationship. If we also have visual feedback on a computer screen, we have an even more complete understanding of the volume’s range and change rate.

Sensory diversity makes a big difference, and it's a recurring theme in neuroscience. Research shows that multisensory input helps us learn more quickly, react faster, and remember longer. And perhaps because our brains are so receptive to it, multisensory engagement also elicits greater satisfaction.

There should be more interaction between physical objects and the digital information overlaid on top of them (an idea known as tangible user interfaces). So I created a few shapes that can be manipulated in different ways to trigger unique AR responses. I call them PiCAROs: physical controllers for augmented reality objects.

Dice

First, I made dice.

Dial

And then a dial, since volume knobs are so satisfying.

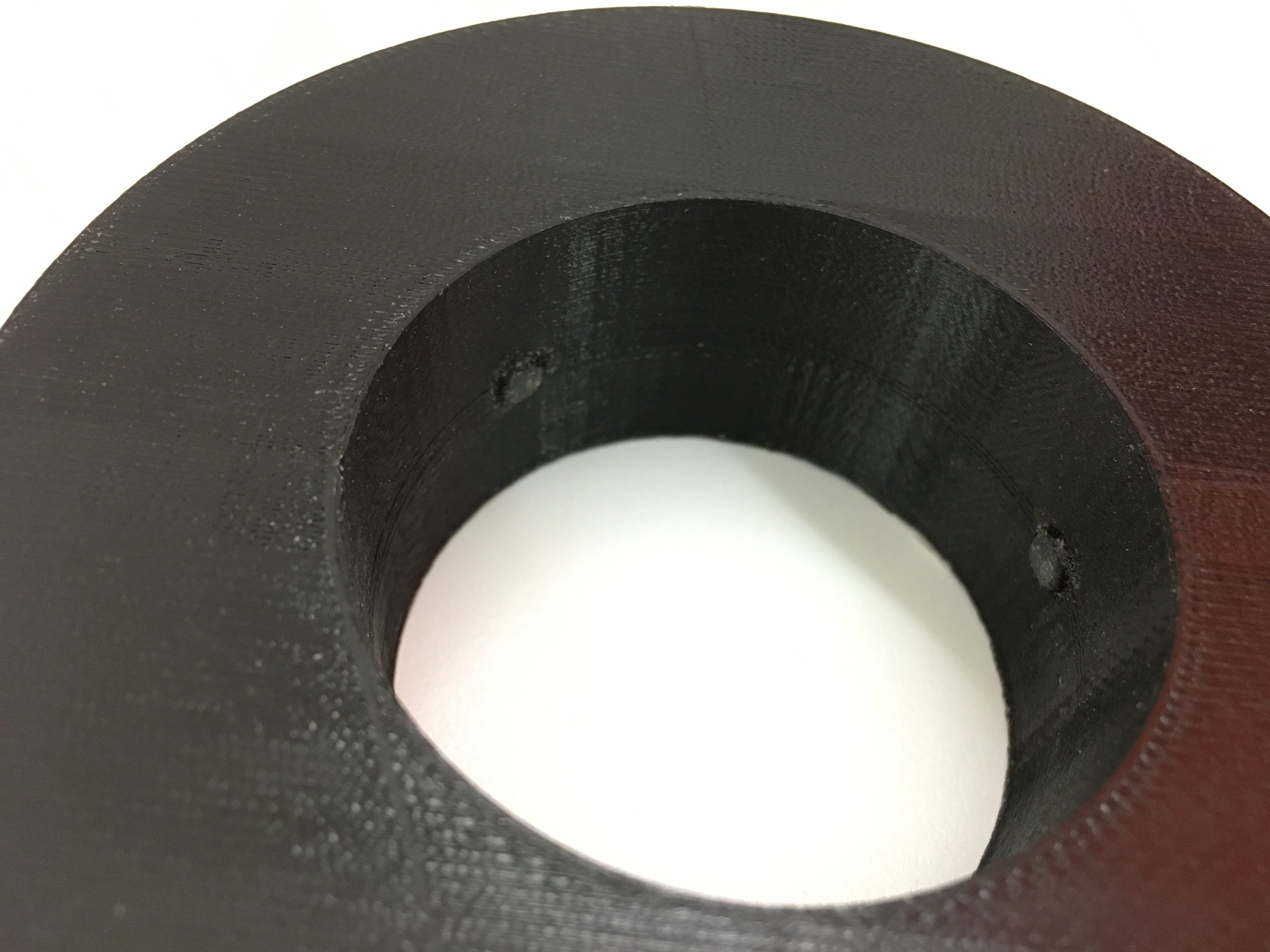

I designed and printed an inner ring, an outer ring, and a piston that is flat on one side and concave on the other. And I took a spring from a mechanical pencil.

The donut (the outer ring) has three nubs on the inside that catch on the concave side of the piston.

I had to wrap some tape around the inner ring because the donut wasn't sitting as snugly as I'd hoped.

I glued on the spring from the mechanical pencil to complete the piston.

I CADed and 3D-printed pieces for a dial in which only the outer ring rotates while the inner ring stays stationary. That way, the combined pattern changes with every turn, and the camera can recognize each position as a different target.

I needed the dial to click into exactly the same positions every time, because each AR image is assigned to one specific target image. So I put nubs on the inside of the donut and used a spring-loaded piston to allow for an easy slide-catch-slide motion.
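To make the click-to-content relationship concrete, here is a minimal Python sketch of the idea. It is not the app's actual code. It assumes evenly spaced detents with one click position per nub (three, going by the build above, which is itself an assumption), and the target and overlay names are hypothetical placeholders. In the real app, the AR software recognizes the target image and shows whatever content is attached to it.

```python
# Hypothetical sketch: the spring-loaded piston snaps the outer ring into one of
# NUM_DETENTS evenly spaced positions, and each position exposes one target image.
NUM_DETENTS = 3  # assumption: one click position per nub

# Hypothetical target-image names, one per detent, and the AR overlay assigned to each.
TARGET_FOR_DETENT = ["dial_target_0", "dial_target_1", "dial_target_2"]
OVERLAY_FOR_TARGET = {
    "dial_target_0": "overlay_a",
    "dial_target_1": "overlay_b",
    "dial_target_2": "overlay_c",
}


def nearest_detent(angle_deg: float, num_detents: int = NUM_DETENTS) -> int:
    """Return the index of the detent the piston would snap into for a given angle."""
    step = 360.0 / num_detents
    # Normalize to [0, 360), snap to the nearest detent, and wrap 360 back to 0.
    return round((angle_deg % 360.0) / step) % num_detents


def overlay_for_angle(angle_deg: float) -> str:
    """Look up the AR overlay for whichever target the dial currently shows."""
    target = TARGET_FOR_DETENT[nearest_detent(angle_deg)]
    return OVERLAY_FOR_TARGET[target]
```

For example, `overlay_for_angle(245)` snaps to detent 2 and returns `"overlay_c"`. The snapping step is why repeatable detents matter: any angle near a click position resolves to the same target image, and so to the same AR content.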

The base is also hollow with an opening, so I could fill it with sand or something to weight it down.

The dial didn't work very well when I first tested it, so I added more complex patterns to make each position more unique and easier for the camera to detect (thanks to Brosvision for their awesome Target Marker Generator). It made a big difference, but unfortunately not enough.

The dial ended up being the least effective of the PiCAROs. Essentially, the app keeps tracking one image even while the dial is rotating and doesn't switch to the new target image fast enough. The dice and hexaflexagon, on the other hand, get a chance to "reset" because the target marker leaves the camera's field of view.
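One way to picture why the dial lagged is a toy model of "sticky" tracking, sketched below in Python. This is an assumption for illustration, not how any particular AR SDK works internally. In this model, the tracker keeps following its locked target while any of it is still recognizable and only runs fresh detection once that target is lost. The target names `A` and `B` are hypothetical.

```python
from typing import Iterable, List, Optional, Set


class StickyTracker:
    """Toy model of a tracker that prefers its current target over new detections."""

    def __init__(self) -> None:
        self.current: Optional[str] = None

    def update(self, visible: Set[str]) -> Optional[str]:
        # Keep following the locked target while any part of it is still recognizable.
        if self.current is not None and self.current in visible:
            return self.current
        # Target lost: run detection and lock onto a visible target
        # (alphabetically first, just to keep the toy model deterministic).
        self.current = min(visible) if visible else None
        return self.current


def run(frames: Iterable[Set[str]]) -> List[Optional[str]]:
    """Feed a sequence of frames (sets of visible targets) through a fresh tracker."""
    tracker = StickyTracker()
    return [tracker.update(frame) for frame in frames]


# Dial: the old pattern "A" stays partly visible while the new pattern "B" rotates in.
dial_frames = [{"A"}, {"A", "B"}, {"A", "B"}, {"B"}]
# Dice: the marker leaves the camera's view between faces, forcing a reset.
dice_frames = [{"A"}, set(), {"B"}]

print(run(dial_frames))  # ['A', 'A', 'A', 'B']
print(run(dice_frames))  # ['A', None, 'B']
```

With the dial frames, this model keeps reporting the old target `A` for two extra frames while both patterns are partly visible. With the dice frames, the empty frame clears the lock, so `B` is picked up as soon as it appears.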

Hexaflexagon

And my personal favorite, the hexaflexagon! If you aren't familiar, a hexaflexagon is basically a flat, folded paper hexagon that can be flexed and twisted in trippy ways to reveal hidden faces. Check out this super entertaining video from Vi Hart demonstrating how cool they are: https://www.youtube.com/watch?v=VIVIegSt81k

I made a variation called the hexahexaflexagon, which has a whopping 15 sides! (It's actually pretty hard to find all 15.)

The powerful thing about this project is that with just a smartphone camera, dumb objects can be converted into smart controllers. With the quality of computer vision today, we can now leverage existing, sensorless objects in our environment and overlay them with digital purpose.

AR as shown in many promo videos promises to increase visual input but reduces input from the other senses. Perception is indeed visually dominant, but we have so many other senses. The natural way we gain information from the world is by engaging all of them together, so our tech should engage those senses too!

And the full video compilation:

I applied the concepts in this project to a real application: playing music. To see how I turned music into a tactile experience, check out AR Playlist.